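Collaborative Filtering Based on Matrix Factorization

A Brief History of Matrix Factorization

Traditional SVD:

"SVD matrix factorization" usually refers to singular value decomposition. Here we will call it Traditional SVD (traditional and classic). Its form is:

$$M = U \Sigma V^{T}$$

Traditional SVD factors a matrix into a product of three matrices, the middle one being the diagonal matrix of singular values. It has one precondition: the matrix must be dense, meaning that no entry may be missing. Otherwise SVD cannot be applied.

Rating data, however, is sparse in the vast majority of cases. To use Traditional SVD anyway, the usual approach is to fill the missing entries with the mean or another statistic first and then apply SVD for dimensionality reduction, but the filled-in values distort the original data.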
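The fill-then-decompose procedure can be sketched as follows. This is only an illustration under stated assumptions: the function name `fill_and_truncate`, the choice of per-item (column) means as the fill value, and the toy rating matrix are all hypothetical and not part of the original text.

```python
import numpy as np

def fill_and_truncate(ratings, k):
    """Fill NaNs with per-item (column) means, then keep the top-k singular values."""
    filled = ratings.copy()
    col_means = np.nanmean(filled, axis=0)            # mean of the observed ratings per item
    missing = np.isnan(filled)
    # np.where returns indices in row-major order, matching boolean-mask assignment
    filled[missing] = np.take(col_means, np.where(missing)[1])
    U, s, Vt = np.linalg.svd(filled, full_matrices=False)
    approx = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]    # rank-k reconstruction
    return filled, approx

# Toy user-item matrix (assumed data); NaN marks a missing rating
ratings = np.array([
    [5.0, 3.0, np.nan, 1.0],
    [4.0, np.nan, np.nan, 1.0],
    [1.0, 1.0, np.nan, 5.0],
    [np.nan, 1.0, 5.0, 4.0],
])
filled, approx = fill_and_truncate(ratings, k=2)
```

Note that the third item has a single observed rating, so every missing entry in that column becomes 5.0. This is exactly the kind of distortion that filling introduces.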

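FunkSVD (LFM)

As described above, Traditional SVD has to fill the matrix before it can factorize and reduce it, and it is also computationally expensive because it produces three matrices. Funk SVD was later proposed to address this: it no longer factors the matrix into three matrices but into two, a user-latent-factor matrix and an item-latent-factor matrix. Funk SVD is also known as the original LFM model.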

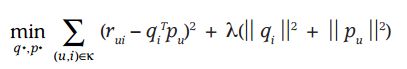

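Borrowing the idea of linear regression, FunkSVD looks for the best latent vectors of users and items by minimizing the squared error on the observed ratings:

$$\min_{P,Q} \sum_{u,i \in R} (r_{ui} - \vec{p_u} \cdot \vec{q_i})^2$$

To avoid overfitting the observed data, a version of FunkSVD with an L2 regularization term was also proposed:

$$\min_{P,Q} \sum_{u,i \in R} (r_{ui} - \vec{p_u} \cdot \vec{q_i})^2 + \lambda\Big(\sum_{u} \|\vec{p_u}\|^2 + \sum_{i} \|\vec{q_i}\|^2\Big)$$

Both objectives can be minimized with gradient descent or stochastic gradient descent.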
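As a rough sketch, the two objectives (plain squared error, and squared error plus an L2 penalty) can be evaluated over the observed ratings like this. The function name `funk_svd_loss`, the triple format `(u, i, r)`, and the toy values are assumptions made for illustration; setting `lam=0` gives the unregularized objective.

```python
import numpy as np

def funk_svd_loss(ratings, P, Q, lam=0.0):
    """Squared error over observed (u, i, r) triples plus lam * (||P||^2 + ||Q||^2)."""
    squared_error = sum((r - P[u] @ Q[i]) ** 2 for u, i, r in ratings)
    penalty = lam * ((P ** 2).sum() + (Q ** 2).sum())
    return squared_error + penalty

# Toy factors (assumed): 2 users and 2 items, 2 latent factors each, one row per user/item
P = np.ones((2, 2))
Q = np.ones((2, 2))
ratings = [(0, 0, 2.0), (1, 1, 3.0)]   # only observed entries enter the loss

plain = funk_svd_loss(ratings, P, Q)
regularized = funk_svd_loss(ratings, P, Q, lam=0.1)
```

Only the observed triples contribute to the error term, which is why no filling of the rating matrix is needed.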

BiasSVD:

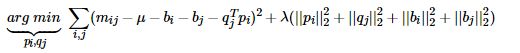

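After FunkSVD was proposed, many variants appeared. One of the more successful is BiasSVD which, as the name suggests, is SVD factorization with bias terms:

$$\hat{r}_{ui} = \mu + b_u + b_i + \vec{p_u} \cdot \vec{q_i}$$

Here $\mu$ is the global mean rating, $b_u$ the user bias, and $b_i$ the item bias. BiasSVD rests on the same assumption as the Baseline predictor, but brings the Baseline bias terms into the matrix factorization.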
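A minimal sketch of a BiasSVD-style prediction, assuming the usual form "global mean + user bias + item bias + dot product of latent vectors". The function name `bias_svd_predict` and all numbers are made up for illustration.

```python
import numpy as np

def bias_svd_predict(mu, b_u, b_i, p_u, q_i):
    """Baseline part (mu + b_u + b_i) plus the latent-factor interaction p_u . q_i."""
    return mu + b_u + b_i + float(np.dot(p_u, q_i))

# Assumed values: a slightly harsh user (b_u < 0) rating a well-liked item (b_i > 0)
pred = bias_svd_predict(
    mu=3.5, b_u=-0.3, b_i=0.5,
    p_u=np.array([0.1, 0.2]),
    q_i=np.array([0.5, -0.5]),
)
```

With zero latent vectors the prediction reduces to the Baseline estimate, which is the sense in which BiasSVD extends it.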

SVD++:

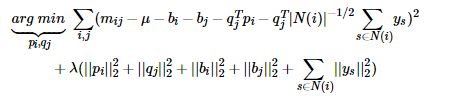

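BiasSVD was later improved into what is called SVD++, which adds the user's implicit feedback on top of BiasSVD.

Explicit feedback means behaviour such as a user's ratings; implicit feedback means the user's browsing history, purchase history, listening history, and so on.

SVD++ rests on this assumption: on top of BiasSVD, a user's browsing, purchase, and listening history for items indirectly reflects that user's preferences.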
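The text above does not give the SVD++ formula, so the sketch below assumes the commonly cited prediction rule $\hat{r}_{ui} = \mu + b_u + b_i + \vec{q_i} \cdot \big(\vec{p_u} + |N(u)|^{-1/2} \sum_{j \in N(u)} \vec{y_j}\big)$, where $N(u)$ is the set of items the user interacted with implicitly and $\vec{y_j}$ is an extra per-item factor vector. The names `svdpp_predict`, `Y`, and `implicit_items` are hypothetical.

```python
import numpy as np

def svdpp_predict(mu, b_u, b_i, p_u, q_i, Y, implicit_items):
    """BiasSVD prediction with the user vector shifted by normalized implicit-feedback factors."""
    if len(implicit_items) > 0:
        # Sum the implicit factor vectors of the items in N(u), scaled by |N(u)|^(-1/2)
        implicit = Y[implicit_items].sum(axis=0) / np.sqrt(len(implicit_items))
    else:
        implicit = np.zeros_like(p_u)
    return mu + b_u + b_i + float(np.dot(q_i, p_u + implicit))

# Assumed toy values: 4 items with implicit factor vectors of length 2
Y = np.full((4, 2), 0.5)
p_u = np.zeros(2)
q_i = np.array([1.0, 2.0])

with_history = svdpp_predict(3.0, 0.0, 0.0, p_u, q_i, Y, [0, 1, 2, 3])
without_history = svdpp_predict(3.0, 0.0, 0.0, p_u, q_i, Y, [])
```

When the user has no implicit history the rule falls back to the BiasSVD prediction.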

Matrix Factorization CF Implementation (1): LFM

LFM is the Funk SVD matrix factorization mentioned earlier.

How LFM Works

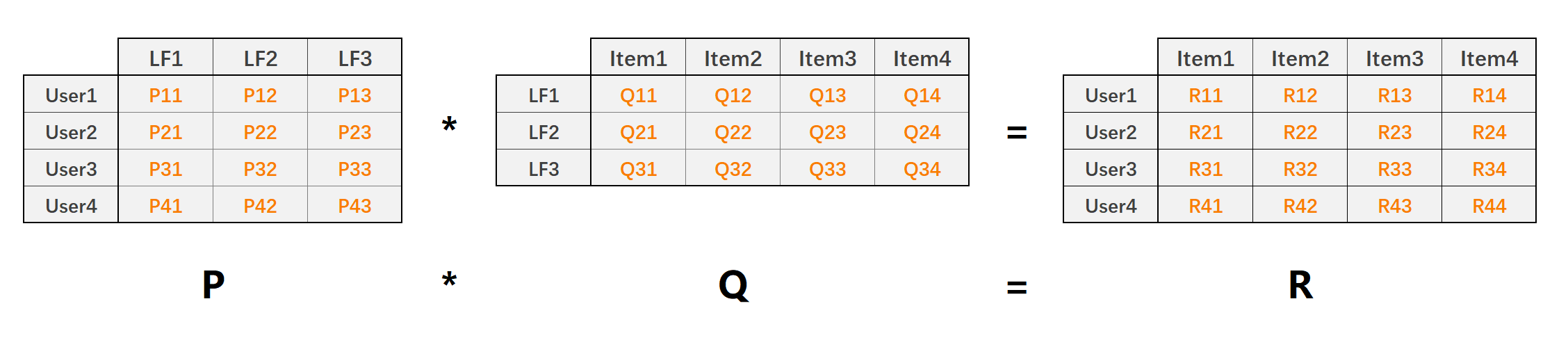

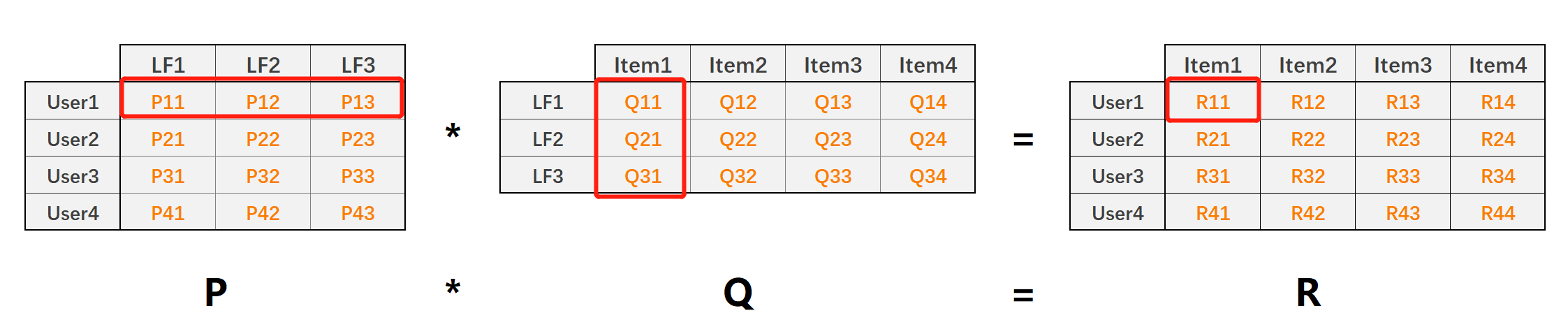

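The core idea of LFM (latent factor model) is to connect users and items through latent factors, as illustrated in the figure:

- P is the User-LF matrix, i.e. the matrix of users and latent factors. It has three LF columns, meaning there are three latent factors in total.

- Q is the LF-Item matrix, i.e. the matrix of latent factors and items.

- R is the User-Item matrix, obtained as P*Q.

- LFM can handle a sparse rating matrix.

Matrix factorization decomposes the original User-Item rating matrix (dense or sparse) into the matrices P and Q, and the product $P \times Q$ then reconstructs the User-Item rating matrix $R$. In this notation:

- $P_{11}$ is the weight of user 1 on latent factor 1.

- $Q_{11}$ is the weight of latent factor 1 on item 1.

- $R_{11}$ is the predicted rating of user 1 for item 1, with $R_{11} = \vec{P_{1,k}} \cdot \vec{Q_{k,1}}$.

Using LFM, the predicted rating of user $u$ for item $i$ is

$$\hat{r}_{ui} = \vec{p_u} \cdot \vec{q_i} = \sum_{k=1}^{K} p_{uk} q_{ik}$$

where $K$ is the number of latent factors.

The final goal is therefore to find the matrices P and Q, that is, every value in them, and then use them to predict user-item ratings.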
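To make the roles of P, Q, and R concrete, here is a small NumPy sketch with three latent factors. The matrix values are invented for illustration only.

```python
import numpy as np

# User-LF matrix: 2 users x 3 latent factors (assumed values)
P = np.array([[1.0, 0.5, 0.0],
              [0.0, 1.0, 2.0]])

# LF-Item matrix: 3 latent factors x 4 items (assumed values)
Q = np.array([[2.0, 1.0, 0.0, 4.0],
              [2.0, 0.0, 1.0, 0.0],
              [0.5, 1.0, 0.0, 1.0]])

# Predicted User-Item matrix: every entry is a row of P dotted with a column of Q
R = P @ Q

# Predicted rating of user 1 for item 1 (index 0 in both cases)
r_11 = P[0] @ Q[:, 0]
```

Here `r_11` is 1.0*2.0 + 0.5*2.0 + 0.0*0.5 = 3.0, the same value as `R[0, 0]`.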

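Loss Function

As before, rating prediction uses the squared error to build the loss function:

$$Cost = \sum_{u,i \in R} (r_{ui} - \hat{r}_{ui})^2 = \sum_{u,i \in R} \Big(r_{ui} - \sum_{k=1}^{K} p_{uk} q_{ik}\Big)^2$$

Adding L2 regularization:

$$Cost = \sum_{u,i \in R} (r_{ui} - \hat{r}_{ui})^2 + \lambda\Big(\sum_{u}\sum_{k} p_{uk}^2 + \sum_{i}\sum_{k} q_{ik}^2\Big)$$

Taking the partial derivatives of the loss function:

$$\frac{\partial Cost}{\partial p_{uk}} = -2 \sum_{i:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, q_{ik} + 2\lambda p_{uk}$$

$$\frac{\partial Cost}{\partial q_{ik}} = -2 \sum_{u:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, p_{uk} + 2\lambda q_{ik}$$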
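One way to gain confidence in the partial derivative of the L2-regularized squared-error loss with respect to $p_{uk}$, namely $-2\sum_i (r_{ui} - \hat{r}_{ui})\, q_{ik} + 2\lambda p_{uk}$, is to compare it with a central finite difference. The sketch below is an illustrative check, not part of the original material; the helper names `cost` and `grad_p`, the random factors, and the toy ratings are assumptions, and Q is stored with one row per item.

```python
import numpy as np

def cost(P, Q, ratings, lam):
    """Squared error on observed (u, i, r) triples plus the L2 penalty on all of P and Q."""
    sq_err = sum((r - P[u] @ Q[i]) ** 2 for u, i, r in ratings)
    return sq_err + lam * ((P ** 2).sum() + (Q ** 2).sum())

def grad_p(P, Q, ratings, lam, u, k):
    """Analytic dCost/dp_uk, summing residuals over the items rated by user u."""
    residual_sum = sum((r - P[uu] @ Q[i]) * Q[i, k] for uu, i, r in ratings if uu == u)
    return -2.0 * residual_sum + 2.0 * lam * P[u, k]

rng = np.random.default_rng(0)
P = rng.random((3, 2))    # 3 users x 2 latent factors
Q = rng.random((4, 2))    # 4 items x 2 latent factors
ratings = [(0, 0, 5.0), (0, 2, 3.0), (1, 1, 4.0), (2, 3, 1.0), (2, 0, 2.0)]
lam, u, k, eps = 0.1, 0, 1, 1e-6

# Central difference: perturb only p_uk in both directions
P_plus, P_minus = P.copy(), P.copy()
P_plus[u, k] += eps
P_minus[u, k] -= eps
numeric = (cost(P_plus, Q, ratings, lam) - cost(P_minus, Q, ratings, lam)) / (2 * eps)
analytic = grad_p(P, Q, ratings, lam, u, k)
```

The two values should agree up to floating-point rounding, because the loss is quadratic in any single entry of P.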

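Optimization with Stochastic Gradient Descent

Gradient descent updates the parameters as follows (the constant factor 2 is absorbed into the learning rate $\alpha$):

$$p_{uk} := p_{uk} + \alpha\Big[\sum_{i:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, q_{ik} - \lambda p_{uk}\Big]$$

Similarly:

$$q_{ik} := q_{ik} + \alpha\Big[\sum_{u:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, p_{uk} - \lambda q_{ik}\Big]$$

Stochastic gradient descent applies the update for one observed rating at a time. Here $\hat{r}_{ui} = \sum_{k} p_{uk} q_{ik}$ is the dot product of the two vectors: multiply them component by component, then sum.

$$p_{uk} := p_{uk} + \alpha\big[(r_{ui} - \hat{r}_{ui})\, q_{ik} - \lambda_1 p_{uk}\big]$$

$$q_{ik} := q_{ik} + \alpha\big[(r_{ui} - \hat{r}_{ui})\, p_{uk} - \lambda_2 q_{ik}\big]$$

Because P and Q are two different matrices, they are usually given separate regularization parameters, such as $\lambda_1$ and $\lambda_2$.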
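A single stochastic gradient descent step with separate regularization parameters can be sketched as below. The function name `sgd_step` and the numbers are illustrative assumptions; the important detail is that both new vectors are computed from the values held before the step.

```python
import numpy as np

def sgd_step(p_u, q_i, r_ui, alpha, lam1, lam2):
    """One SGD update for a single observed rating; returns new vectors and the old error."""
    err = r_ui - np.dot(p_u, q_i)                    # residual with the current vectors
    new_p = p_u + alpha * (err * q_i - lam1 * p_u)   # uses the old q_i
    new_q = q_i + alpha * (err * p_u - lam2 * q_i)   # uses the old p_u, not new_p
    return new_p, new_q, err

p_u = np.array([0.5, 0.5])
q_i = np.array([1.0, 1.0])
new_p, new_q, err = sgd_step(p_u, q_i, r_ui=4.0, alpha=0.1, lam1=0.01, lam2=0.02)
new_err = 4.0 - np.dot(new_p, new_q)
```

In this toy case the error drops from 3.0 to roughly 2.16 after one step.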

Implementation

    '''
    LFM Model
    '''
    import numpy as np
    import pandas as pd


    # Rating prediction on a 1-5 scale
    class LFM(object):

        def __init__(self, alpha, reg_p, reg_q, number_LatentFactors=10, number_epochs=10,
                     columns=("uid", "iid", "rating")):
            self.alpha = alpha  # learning rate
            self.reg_p = reg_p  # regularization parameter for P
            self.reg_q = reg_q  # regularization parameter for Q
            self.number_LatentFactors = number_LatentFactors  # number of latent factors
            self.number_epochs = number_epochs  # maximum number of epochs
            self.columns = list(columns)  # column names: user id, item id, rating

        def fit(self, dataset):
            '''
            fit dataset
            :param dataset: uid, iid, rating
            :return:
            '''
            self.dataset = pd.DataFrame(dataset)

            # Grouped views; their indexes list the known users and items
            self.users_ratings = self.dataset.groupby(self.columns[0]).agg([list])[[self.columns[1], self.columns[2]]]
            self.items_ratings = self.dataset.groupby(self.columns[1]).agg([list])[[self.columns[0], self.columns[2]]]

            self.globalMean = self.dataset[self.columns[2]].mean()

            self.P, self.Q = self.sgd()

        def _init_matrix(self):
            '''
            Initialize P and Q with random values between 0 and 1
            :return:
            '''
            # User-LF
            P = dict(zip(
                self.users_ratings.index,
                np.random.rand(len(self.users_ratings), self.number_LatentFactors).astype(np.float32)
            ))
            # Item-LF
            Q = dict(zip(
                self.items_ratings.index,
                np.random.rand(len(self.items_ratings), self.number_LatentFactors).astype(np.float32)
            ))
            return P, Q

        def sgd(self):
            '''
            Optimize P and Q with stochastic gradient descent
            :return:
            '''
            P, Q = self._init_matrix()

            for i in range(self.number_epochs):
                print("iter%d" % i)
                error_list = []
                for uid, iid, r_ui in self.dataset[self.columns].itertuples(index=False):
                    v_pu = P[uid]  # user vector
                    v_qi = Q[iid]  # item vector
                    err = np.float32(r_ui - np.dot(v_pu, v_qi))

                    # Build both updates from the pre-update vectors. Updating v_pu
                    # in place first would leak the new user vector into the item update.
                    P[uid] = v_pu + self.alpha * (err * v_qi - self.reg_p * v_pu)
                    Q[iid] = v_qi + self.alpha * (err * v_pu - self.reg_q * v_qi)

                    error_list.append(err ** 2)
                # Training RMSE for this epoch
                print(np.sqrt(np.mean(error_list)))

            return P, Q

        def predict(self, uid, iid):
            # If uid or iid is unknown, return the global mean rating as the prediction
            if uid not in self.users_ratings.index or iid not in self.items_ratings.index:
                return self.globalMean

            p_u = self.P[uid]
            q_i = self.Q[iid]

            return np.dot(p_u, q_i)

        def test(self, testset):
            '''Predict ratings for the test set'''
            for uid, iid, real_rating in testset.itertuples(index=False):
                try:
                    pred_rating = self.predict(uid, iid)
                except Exception as e:
                    print(e)
                else:
                    yield uid, iid, real_rating, pred_rating


    if __name__ == '__main__':
        dtype = [("userId", np.int32), ("movieId", np.int32), ("rating", np.float32)]
        dataset = pd.read_csv("datasets/ml-latest-small/ratings.csv", usecols=range(3), dtype=dict(dtype))

        lfm = LFM(0.02, 0.01, 0.01, 10, 100, ["userId", "movieId", "rating"])
        lfm.fit(dataset)

        while True:
            uid = input("uid: ")
            iid = input("iid: ")
            print(lfm.predict(int(uid), int(iid)))

Matrix Factorization CF Implementation (2): BiasSvd

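BiasSvd is simply the Funk SVD matrix factorization described earlier with bias terms added.

BiasSvd

Using BiasSvd, the predicted rating of user $u$ for item $i$ is

$$\hat{r}_{ui} = \mu + b_u + b_i + \vec{p_u} \cdot \vec{q_i} = \mu + b_u + b_i + \sum_{k=1}^{K} p_{uk} q_{ik}$$

where $\mu$ is the global mean rating, $b_u$ the user bias, $b_i$ the item bias, and $K$ the number of latent factors.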

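Loss Function

As before, rating prediction uses the squared error to build the loss function:

$$Cost = \sum_{u,i \in R} (r_{ui} - \hat{r}_{ui})^2 = \sum_{u,i \in R} \Big(r_{ui} - \mu - b_u - b_i - \sum_{k=1}^{K} p_{uk} q_{ik}\Big)^2$$

Adding L2 regularization:

$$Cost = \sum_{u,i \in R} (r_{ui} - \hat{r}_{ui})^2 + \lambda\Big(\sum_{u} b_u^2 + \sum_{i} b_i^2 + \sum_{u}\sum_{k} p_{uk}^2 + \sum_{i}\sum_{k} q_{ik}^2\Big)$$

Taking the partial derivatives of the loss function:

$$\frac{\partial Cost}{\partial p_{uk}} = -2 \sum_{i:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, q_{ik} + 2\lambda p_{uk}$$

$$\frac{\partial Cost}{\partial q_{ik}} = -2 \sum_{u:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, p_{uk} + 2\lambda q_{ik}$$

$$\frac{\partial Cost}{\partial b_u} = -2 \sum_{i:(u,i) \in R} (r_{ui} - \hat{r}_{ui}) + 2\lambda b_u$$

$$\frac{\partial Cost}{\partial b_i} = -2 \sum_{u:(u,i) \in R} (r_{ui} - \hat{r}_{ui}) + 2\lambda b_i$$

Optimization with Stochastic Gradient Descent

Gradient descent updates the parameters as follows (the constant factor 2 is absorbed into the learning rate $\alpha$):

$$p_{uk} := p_{uk} + \alpha\Big[\sum_{i:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, q_{ik} - \lambda p_{uk}\Big]$$

Similarly:

$$q_{ik} := q_{ik} + \alpha\Big[\sum_{u:(u,i) \in R} (r_{ui} - \hat{r}_{ui})\, p_{uk} - \lambda q_{ik}\Big]$$

$$b_u := b_u + \alpha\Big[\sum_{i:(u,i) \in R} (r_{ui} - \hat{r}_{ui}) - \lambda b_u\Big]$$

$$b_i := b_i + \alpha\Big[\sum_{u:(u,i) \in R} (r_{ui} - \hat{r}_{ui}) - \lambda b_i\Big]$$

Stochastic gradient descent applies the update for one observed rating at a time:

$$p_{uk} := p_{uk} + \alpha\big[(r_{ui} - \hat{r}_{ui})\, q_{ik} - \lambda_1 p_{uk}\big]$$

$$q_{ik} := q_{ik} + \alpha\big[(r_{ui} - \hat{r}_{ui})\, p_{uk} - \lambda_2 q_{ik}\big]$$

$$b_u := b_u + \alpha\big[(r_{ui} - \hat{r}_{ui}) - \lambda_3 b_u\big]$$

$$b_i := b_i + \alpha\big[(r_{ui} - \hat{r}_{ui}) - \lambda_4 b_i\big]$$

Because P and Q are two different matrices, they are usually given separate regularization parameters, such as $\lambda_1$ and $\lambda_2$. The two bias terms likewise get their own, $\lambda_3$ and $\lambda_4$.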
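As a hedged illustration, one stochastic gradient descent step for BiasSvd on a single observed rating might look like this. The function name `bias_sgd_step`, its argument order, and the toy numbers are assumptions; the four `reg_*` arguments play the role of separate regularization parameters for P, Q, and the two biases.

```python
import numpy as np

def bias_sgd_step(mu, b_u, b_i, p_u, q_i, r_ui, alpha, reg_p, reg_q, reg_bu, reg_bi):
    """One SGD update of the biases and latent vectors for a single rating."""
    err = r_ui - (mu + b_u + b_i + np.dot(p_u, q_i))   # residual with current parameters
    new_p = p_u + alpha * (err * q_i - reg_p * p_u)     # uses the old q_i
    new_q = q_i + alpha * (err * p_u - reg_q * q_i)     # uses the old p_u
    new_bu = b_u + alpha * (err - reg_bu * b_u)
    new_bi = b_i + alpha * (err - reg_bi * b_i)
    return new_bu, new_bi, new_p, new_q, err

mu = 3.0
p_u = np.array([0.1, 0.1])
q_i = np.array([0.1, 0.1])
new_bu, new_bi, new_p, new_q, err = bias_sgd_step(
    mu, 0.0, 0.0, p_u, q_i, r_ui=4.0,
    alpha=0.1, reg_p=0.01, reg_q=0.01, reg_bu=0.01, reg_bi=0.01,
)
new_err = 4.0 - (mu + new_bu + new_bi + np.dot(new_p, new_q))
```

Starting from zero biases, most of the first correction goes into `b_u` and `b_i`, since the latent vectors are still small.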

Implementation

    '''
    BiasSvd Model
    '''
    import numpy as np
    import pandas as pd


    class BiasSvd(object):

        def __init__(self, alpha, reg_p, reg_q, reg_bu, reg_bi, number_LatentFactors=10, number_epochs=10,
                     columns=("uid", "iid", "rating")):
            self.alpha = alpha  # learning rate
            self.reg_p = reg_p  # regularization parameter for P
            self.reg_q = reg_q  # regularization parameter for Q
            self.reg_bu = reg_bu  # regularization parameter for the user bias
            self.reg_bi = reg_bi  # regularization parameter for the item bias
            self.number_LatentFactors = number_LatentFactors  # number of latent factors
            self.number_epochs = number_epochs  # maximum number of epochs
            self.columns = list(columns)  # column names: user id, item id, rating

        def fit(self, dataset):
            '''
            fit dataset
            :param dataset: uid, iid, rating
            :return:
            '''
            self.dataset = pd.DataFrame(dataset)

            # Grouped views; their indexes list the known users and items
            self.users_ratings = self.dataset.groupby(self.columns[0]).agg([list])[[self.columns[1], self.columns[2]]]
            self.items_ratings = self.dataset.groupby(self.columns[1]).agg([list])[[self.columns[0], self.columns[2]]]
            self.globalMean = self.dataset[self.columns[2]].mean()

            self.P, self.Q, self.bu, self.bi = self.sgd()

        def _init_matrix(self):
            '''
            Initialize P and Q with random values between 0 and 1
            :return:
            '''
            # User-LF
            P = dict(zip(
                self.users_ratings.index,
                np.random.rand(len(self.users_ratings), self.number_LatentFactors).astype(np.float32)
            ))
            # Item-LF
            Q = dict(zip(
                self.items_ratings.index,
                np.random.rand(len(self.items_ratings), self.number_LatentFactors).astype(np.float32)
            ))
            return P, Q

        def sgd(self):
            '''
            Optimize P, Q and the biases with stochastic gradient descent
            :return:
            '''
            P, Q = self._init_matrix()

            # Initialize bu and bi to all zeros
            bu = dict(zip(self.users_ratings.index, np.zeros(len(self.users_ratings))))
            bi = dict(zip(self.items_ratings.index, np.zeros(len(self.items_ratings))))

            for i in range(self.number_epochs):
                print("iter%d" % i)
                error_list = []
                for uid, iid, r_ui in self.dataset[self.columns].itertuples(index=False):
                    v_pu = P[uid]  # user vector
                    v_qi = Q[iid]  # item vector
                    err = np.float32(r_ui - self.globalMean - bu[uid] - bi[iid] - np.dot(v_pu, v_qi))

                    # Build both updates from the pre-update vectors. Updating v_pu
                    # in place first would leak the new user vector into the item update.
                    P[uid] = v_pu + self.alpha * (err * v_qi - self.reg_p * v_pu)
                    Q[iid] = v_qi + self.alpha * (err * v_pu - self.reg_q * v_qi)

                    bu[uid] += self.alpha * (err - self.reg_bu * bu[uid])
                    bi[iid] += self.alpha * (err - self.reg_bi * bi[iid])

                    error_list.append(err ** 2)
                # Training RMSE for this epoch
                print(np.sqrt(np.mean(error_list)))

            return P, Q, bu, bi

        def predict(self, uid, iid):
            # If uid or iid is unknown, return the global mean rating as the prediction
            if uid not in self.users_ratings.index or iid not in self.items_ratings.index:
                return self.globalMean

            p_u = self.P[uid]
            q_i = self.Q[iid]

            return self.globalMean + self.bu[uid] + self.bi[iid] + np.dot(p_u, q_i)


    if __name__ == '__main__':
        dtype = [("userId", np.int32), ("movieId", np.int32), ("rating", np.float32)]
        dataset = pd.read_csv("datasets/ml-latest-small/ratings.csv", usecols=range(3), dtype=dict(dtype))

        # Pass the real column names; the defaults ("uid", "iid", "rating") do not match this file
        bsvd = BiasSvd(0.02, 0.01, 0.01, 0.01, 0.01, 10, 20, ["userId", "movieId", "rating"])
        bsvd.fit(dataset)

        while True:
            uid = input("uid: ")
            iid = input("iid: ")
            print(bsvd.predict(int(uid), int(iid)))